The unprecedented demands of modern Generative AI and large language models (LLMs) have exposed the limitations of traditional, general-purpose Ethernet, particularly in terms of achieving predictable performance at extreme scale. AI workloads require more than just raw bandwidth; they demand networking optimized for massive parallelism and ultra-low jitter.

NVIDIA responded to this challenge with Spectrum-XGS, an AI-optimized Ethernet networking platform. It is designed to unify distributed data centers and construct the Giga-Scale AI Super Factories essential for the next era of AI development. This guide explains the core components of Spectrum-XGS and details how it delivers the performance, scale, and efficiency required for global, enterprise-level AI workloads.

The Spectrum-XGS Architecture: Components and Innovation

Spectrum-XGS is an end-to-end platform, meaning it encompasses the networking silicon, the intelligent network interface, and the acceleration software layer. This integration ensures seamless performance from the host to the network fabric.

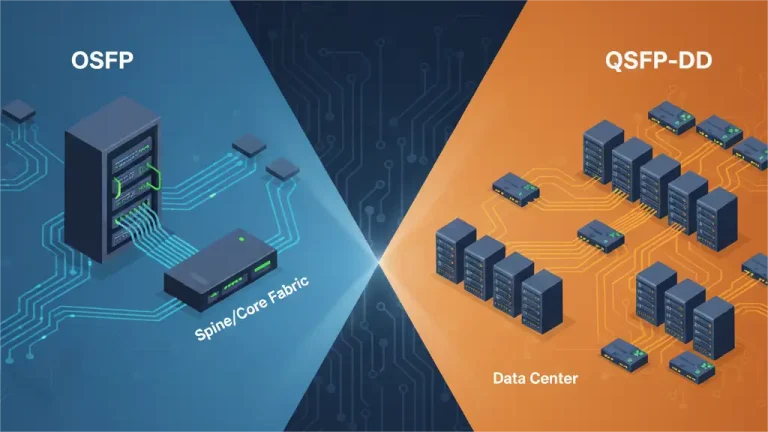

A. The Core Switch: NVIDIA Spectrum-XGS Switch Silicon

At the heart of the platform lies the proprietary Spectrum-XGS Switch Silicon (ASIC). This switch is engineered for high port density and throughput to handle the demanding east-west traffic patterns typical of large-scale AI training clusters.

The silicon’s key innovation is its focus on ultra-low jitter and deterministic performance. Unlike traditional best-effort Ethernet, the Spectrum-XGS switch actively manages congestion so that latency remains predictable, even under peak load. This is critical because synchronized training steps wait for the slowest transfer, so unpredictable latency translates directly to idle GPU cycles and wasted training time.
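To make the link between jitter and idle GPUs concrete, here is a toy model of a synchronous training step in which every worker waits for the slowest network exchange. It is only an illustrative sketch, not a model of Spectrum-XGS itself: the uniform jitter distribution, the function names, and all numbers are assumptions chosen for the example.

```python
import random


def mean_step_time(num_workers, compute_ms, base_latency_ms, jitter_ms,
                   steps=200, seed=0):
    """Average duration of a synchronous step, in milliseconds.

    Each worker's network delay is a fixed base plus a random extra in
    [0, jitter_ms] (assumed uniform). The step ends only when the
    slowest worker has finished its exchange.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(steps):
        slowest = max(
            base_latency_ms + rng.uniform(0.0, jitter_ms)
            for _ in range(num_workers)
        )
        total += compute_ms + slowest
    return total / steps


def non_compute_fraction(num_workers, compute_ms, base_latency_ms, jitter_ms):
    """Fraction of each step that is not spent computing."""
    step = mean_step_time(num_workers, compute_ms, base_latency_ms, jitter_ms)
    return 1.0 - compute_ms / step


if __name__ == "__main__":
    # Hypothetical numbers: 10 ms of compute, 1 ms base latency per step.
    for jitter in (0.0, 1.0, 5.0):
        frac = non_compute_fraction(1024, 10.0, 1.0, jitter)
        print(f"jitter={jitter} ms -> non-compute fraction {frac:.3f}")
```

Because the step time follows the maximum delay across workers, the penalty grows with cluster size: with more workers, it becomes more likely that at least one of them hits the worst-case delay on any given step.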

B. The Intelligent NIC: ConnectX-7 and Beyond

The networking ecosystem is completed by the integration of NVIDIA ConnectX SmartNICs. These intelligent Network Interface Cards (NICs) are far more than simple connectors; they act as powerful network accelerators.

Key Function: ConnectX SmartNICs offload numerous networking and communication tasks from the CPU and GPU. This includes hardware acceleration for protocols like RoCE (RDMA over Converged Ethernet) and advanced congestion control algorithms. By handling these tasks in the network interface itself, ConnectX-7 ensures seamless, high-throughput connectivity across thousands of GPUs, preventing bottlenecks at the endpoint.

C. Software Acceleration: NVIDIA’s Unified Data Movement (UDM)

The hardware performance of the switch and NIC is unlocked by the NVIDIA software stack, which enables the platform’s signature performance characteristics.

The key software innovation is the Unified Data Movement (UDM) technology. UDM leverages the capabilities of the underlying hardware, together with the NVIDIA DOCA framework and the NVIDIA Collective Communications Library (NCCL), to optimize data transfers. This optimization minimizes jitter (the variation in latency), which is essential for synchronized, predictable AI training jobs, particularly in distributed environments.
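Since jitter is defined here as the variation in latency, it can be quantified from a set of latency samples. The sketch below shows one common way to do that, using the standard deviation and the gap between the 99th and 50th percentiles. The function name, the choice of metrics, and the sample values are assumptions for illustration, not part of any NVIDIA tool.

```python
import statistics


def jitter_summary(latencies_us):
    """Summarize latency samples (microseconds) with simple jitter metrics."""
    ordered = sorted(latencies_us)
    n = len(ordered)

    def percentile(p):
        # Nearest-rank percentile for an integer p in 1..100.
        rank = max(1, (p * n + 99) // 100)  # integer ceiling of p*n/100
        return ordered[rank - 1]

    p50 = percentile(50)
    p99 = percentile(99)
    return {
        "mean_us": statistics.fmean(ordered),
        "stdev_us": statistics.pstdev(ordered),  # spread around the mean
        "p50_us": p50,
        "p99_us": p99,
        "tail_spread_us": p99 - p50,  # how far the tail sits above typical
    }


if __name__ == "__main__":
    # Hypothetical samples: one steady link, one with occasional spikes.
    steady = [10.0] * 100
    bursty = [10.0] * 98 + [50.0] * 2
    print(jitter_summary(steady))
    print(jitter_summary(bursty))
```

The two sample sets have similar mean latency, but only the second has a long tail. For synchronized training it is the tail, not the mean, that tends to set the pace.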

The Commercial Advantage: Why Spectrum-XGS is Essential for AI

The technological breakthroughs of Spectrum-XGS translate directly into significant commercial benefits for organizations investing in large-scale AI infrastructure.

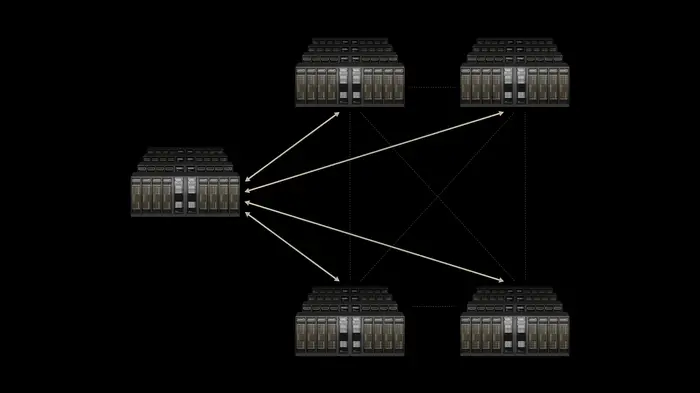

A. Enabling Giga-Scale AI Super Factories

Spectrum-XGS is the foundational technology enabling the concept of the “Giga-Scale AI Super Factory.” This refers to connecting multiple, geographically distributed data centers into a single, cohesive computing unit, which is the purpose NVIDIA designed the solution for.

Benefit: This architecture allows enterprises to consolidate and manage resources on a global scale, effectively treating compute clusters hundreds of miles apart as if they were local. This massive, unified scale is necessary to handle the petabytes of data and trillions of parameters used in training the largest foundation models.
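For a sense of what "hundreds of miles apart" means for the network, the back-of-the-envelope calculation below estimates the propagation delay over fiber and the amount of data in flight on a long link. It is a rough sketch under stated assumptions: a typical fiber refractive index of about 1.47, a straight fiber path, and a hypothetical 400 Gb/s link. Real routes are longer than the straight-line distance and add switching delay on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47       # assumption: typical single-mode fiber
KM_PER_MILE = 1.609344


def one_way_delay_ms(distance_miles):
    """Propagation delay over fiber for the given distance, in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    speed_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    return distance_km / speed_km_s * 1000.0


def bytes_in_flight(distance_miles, link_gbps):
    """Bandwidth-delay product: bytes sent during one round trip."""
    rtt_s = 2.0 * one_way_delay_ms(distance_miles) / 1000.0
    return rtt_s * link_gbps * 1e9 / 8.0


if __name__ == "__main__":
    for miles in (10, 100, 500):
        delay = one_way_delay_ms(miles)
        in_flight_mb = bytes_in_flight(miles, 400) / 1e6
        print(f"{miles} miles: {delay:.2f} ms one way, "
              f"{in_flight_mb:.0f} MB in flight at 400 Gb/s")
```

At 100 miles the one-way delay is roughly 0.8 ms, far above the microsecond-scale latencies inside a single data center. This is a physical floor that no fabric can remove, which is why congestion control and buffering that account for distance matter when distributed sites are treated as one cluster.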

B. Unmatched Performance for LLM Training

AI training jobs are latency-sensitive and require sustained, high GPU utilization. Every millisecond lost to network congestion or unpredictable latency means reduced training efficiency.

Spectrum-XGS’s low-latency, ultra-low-jitter interconnect minimizes idle GPU time and maximizes compute efficiency. This translates directly into shorter training times and a faster time to market for new AI models.

C. Superior Economics and Efficiency

While delivering InfiniBand-like performance characteristics for AI, Spectrum-XGS maintains the cost structure and interoperability of Ethernet, so compatible components from vendors such as PHILISUN can integrate with the platform. This combination provides two economic advantages:

- Efficiency: The intelligent hardware offloads reduce CPU utilization and overall data center power consumption.

- Ubiquity: Integrating seamlessly with existing Ethernet standards reduces complexity and procurement challenges compared to deploying proprietary fabric technologies.

Spectrum-XGS vs. Traditional Networking

When comparing networking platforms for AI, Spectrum-XGS occupies a unique and advantageous position:

| Feature | Traditional Ethernet | InfiniBand | NVIDIA Spectrum-XGS |
| --- | --- | --- | --- |
| Primary Focus | General Network Traffic | HPC & High-Performance AI | Giga-Scale, Distributed AI |
| Latency/Jitter | High, Unpredictable | Ultra-Low, Deterministic | Ultra-Low, Deterministic |
| Congestion Control | Simple, Reactive | Advanced, Adaptive | Advanced, AI-Optimized |
| Interoperability | High | Low (Proprietary) | High (Standard Ethernet) |

Spectrum-XGS combines the best of both worlds: the deterministic performance and scale traditionally associated with InfiniBand, together with the ubiquity, interoperability, and cost structure of standard Ethernet. This makes PHILISUN’s compatible networking components a key part of your deployment strategy.

Conclusion

NVIDIA Spectrum-XGS is more than an upgrade; it is the foundational platform that enables the next generation of distributed, giga-scale AI computing. By addressing the critical challenges of network jitter, scale, and efficiency, it delivers necessary breakthroughs in performance and scalability.

To successfully deploy Giga-Scale AI Super Factories, you need reliable, high-density hardware built on the Spectrum-XGS platform.