What Is The Difference Between NDR And HDR InfiniBand?

AI, machine learning, and High-Performance Computing (HPC) workloads demand minimal latency and maximum data throughput. The network fabric connecting GPUs and CPUs is arguably the most critical component of these systems, and InfiniBand is widely deployed because it consistently delivers the required low-latency performance.

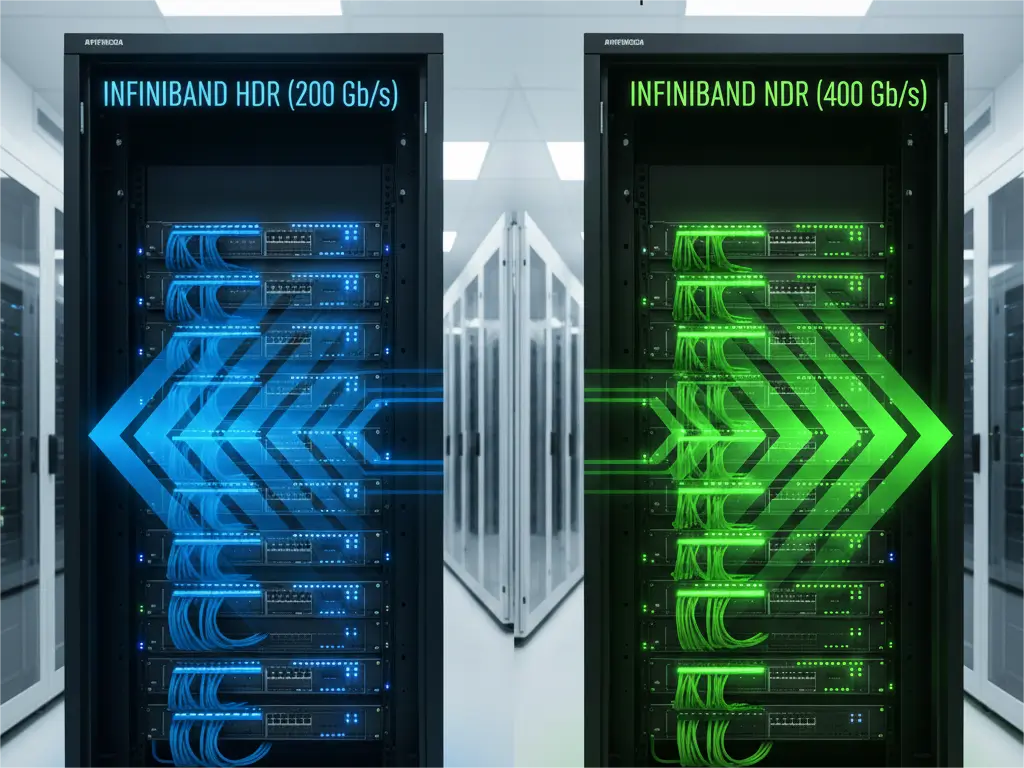

The transition from HDR (High Data Rate, 200Gb/s) to NDR (Next Data Rate, 400Gb/s) is more than a simple speed upgrade; it represents a fundamental architectural change. This shift is necessary to support the scaling requirements of massive data sets and increasingly complex Generative AI models. This technical deep dive compares the two standards across their architectural, performance, and physical-layer aspects, and clarifies the critical hardware implications for system architects.

InfiniBand HDR (200G): The Proven Standard

High Data Rate (HDR) InfiniBand is the previous-generation standard, and it still offers world-class performance for large-scale HPC and AI workloads.

Core HDR Specifications

HDR achieves its 200Gb/s port speed by utilizing four data lanes, each running at 50Gb/s using PAM4 (Pulse Amplitude Modulation 4-level) signaling technology.

| Feature | Specification |
| --- | --- |
| Port Bandwidth | 200 Gb/s |
| Signaling | 4 x 50G lanes (PAM4) |
| Transceiver Form Factor | QSFP56 |

HDR Switch Architecture (NVIDIA Quantum)

The backbone of HDR networks is the NVIDIA Quantum switch platform (e.g., the QM8700 series). These switches provide high-density fabrics, supporting up to 40 ports of 200G and delivering an aggregate bidirectional switching capacity of 16Tb/s. This architecture provides the bandwidth and reliable low-latency performance needed to scale mid-to-large-sized GPU clusters.
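The aggregate figure follows from simple arithmetic: ports × per-port rate × 2, because switch capacity is conventionally quoted counting both directions of every full-duplex port. A minimal sketch, assuming nominal data rates (no FEC or encoding overhead); the function name is hypothetical:

```python
def aggregate_capacity_tbps(ports: int, port_gbps: float) -> float:
    """Bidirectional switching capacity in Tb/s, using nominal port rates.

    Each full-duplex port carries port_gbps in each direction, so the
    quoted capacity counts every port twice.
    """
    return ports * port_gbps * 2 / 1000


# NVIDIA Quantum (HDR): 40 ports x 200 Gb/s
print(aggregate_capacity_tbps(40, 200))  # 16.0
```

The same formula applied to 64 ports at 400 Gb/s gives 51.2 Tb/s, the figure quoted for Quantum-2.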

InfiniBand NDR (400G): Accelerating Modern AI

Next Data Rate (NDR) InfiniBand is the current state-of-the-art, specifically designed to unlock the full potential of NVIDIA’s latest generation of accelerated computing infrastructure.

Core NDR Specifications

NDR achieves a dramatic bandwidth increase by doubling the lane speed from 50Gb/s to 100Gb/s. By maintaining four data lanes, the total port bandwidth reaches 400Gb/s.

| Feature | Specification |
| --- | --- |
| Port Bandwidth | 400 Gb/s |
| Signaling | 4 x 100G lanes (PAM4) |
| Transceiver Form Factor | OSFP or QSFP112 |
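The lane arithmetic for both generations can be captured in a few lines. This is a sketch using nominal per-lane data rates; the class and field names are illustrative, and real line rates are slightly higher than shown because they include FEC and encoding overhead. PAM4 uses four amplitude levels, so each symbol carries 2 bits and the symbol rate is half the bit rate.

```python
from dataclasses import dataclass

PAM4_BITS_PER_SYMBOL = 2  # 4 amplitude levels -> 2 bits per symbol


@dataclass(frozen=True)
class LinkGeneration:
    name: str
    lanes: int
    lane_gbps: float  # nominal data rate per lane

    @property
    def port_gbps(self) -> float:
        # Port bandwidth is the sum of all lanes running in parallel
        return self.lanes * self.lane_gbps

    @property
    def lane_gbaud(self) -> float:
        # Nominal symbol rate per lane (ignores FEC/encoding overhead)
        return self.lane_gbps / PAM4_BITS_PER_SYMBOL


HDR = LinkGeneration("HDR", lanes=4, lane_gbps=50)
NDR = LinkGeneration("NDR", lanes=4, lane_gbps=100)

for gen in (HDR, NDR):
    print(f"{gen.name}: {gen.port_gbps:.0f} Gb/s per port, "
          f"~{gen.lane_gbaud:.0f} GBd per lane")
# HDR: 200 Gb/s per port, ~25 GBd per lane
# NDR: 400 Gb/s per port, ~50 GBd per lane
```

Because the lane count stays at four, doubling the per-lane rate is the only source of the 2x port bandwidth.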

Architectural Leap: NVIDIA Quantum-2 and In-Network Computing

NDR’s superior performance is rooted in the NVIDIA Quantum-2 switch architecture. Quantum-2 features up to 64 ports of 400G, delivering 51.2Tb/s of aggregate bidirectional throughput. This higher radix (more ports per switch) allows significantly flatter topologies, with fewer switch tiers and fewer network hops. (Reference: NVIDIA InfiniBand Switching)
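The effect of radix on topology depth can be made concrete with the textbook capacity of an idealized non-blocking fat tree built from k-port switches: k²/2 end nodes with two switch tiers and k³/4 with three. The sketch below applies those formulas to the 40-port and 64-port figures quoted above; real deployments vary with oversubscription, rail-optimized layouts, and spare ports, and the function name is hypothetical.

```python
def max_hosts(radix: int, tiers: int) -> int:
    """Max end nodes in an idealized non-blocking fat tree.

    2 tiers (leaf/spine): each leaf uses radix/2 ports for hosts and
    radix/2 for uplinks, and up to `radix` leaves fit -> radix**2 / 2.
    3 tiers: radix**3 / 4.
    """
    if tiers == 2:
        return radix ** 2 // 2
    if tiers == 3:
        return radix ** 3 // 4
    raise ValueError("this sketch only models 2- or 3-tier fat trees")


for name, radix in (("Quantum (HDR)", 40), ("Quantum-2 (NDR)", 64)):
    print(f"{name}: 2-tier {max_hosts(radix, 2)}, 3-tier {max_hosts(radix, 3)}")
# Quantum (HDR): 2-tier 800, 3-tier 16000
# Quantum-2 (NDR): 2-tier 2048, 3-tier 65536
```

Under this model, a 2,000-endpoint cluster needs three tiers with 40-port switches but fits in two tiers with 64-port switches, removing one tier of hops.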

Crucially, Quantum-2 introduces enhanced In-Network Computing capabilities. The switch ASIC actively processes data as it flows, offloading computationally intensive collective operations (like Allreduce and Broadcast) from the GPUs. This optimization dramatically reduces host-side communication overhead, slashes data reduction times, and is indispensable for the performance scaling of large-scale, distributed AI training jobs running on the latest H100 GPUs.
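One way to see why switch-side reduction helps is to compare the bytes each host must send. In a ring Allreduce, a common software algorithm, each of N hosts sends about 2(N-1)/N × S bytes for an S-byte buffer; in an idealized in-network reduction, each host sends its buffer once to the switch and receives the reduced result once. The sketch below models both, plus a toy "switch" that sums vectors. It is an illustration under those idealized assumptions, not a model of NVIDIA's actual implementation, and all names are hypothetical.

```python
from typing import List


def ring_allreduce_bytes_sent(n_hosts: int, buf_bytes: int) -> float:
    """Bytes each host sends in a ring Allreduce (reduce-scatter + all-gather)."""
    return 2 * (n_hosts - 1) / n_hosts * buf_bytes


def in_network_allreduce_bytes_sent(buf_bytes: int) -> float:
    """Idealized switch-side reduction: each host uploads its buffer once."""
    return float(buf_bytes)


def toy_switch_allreduce(host_buffers: List[List[float]]) -> List[List[float]]:
    """Toy model: the 'switch' sums buffers element-wise and returns the
    same reduced result to every host."""
    reduced = [sum(values) for values in zip(*host_buffers)]
    return [list(reduced) for _ in host_buffers]


n, size = 64, 1 << 30  # 64 hosts, 1 GiB buffer (illustrative values)
print(ring_allreduce_bytes_sent(n, size) / in_network_allreduce_bytes_sent(size))
# 1.96875
print(toy_switch_allreduce([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))
# [[9.0, 12.0], [9.0, 12.0], [9.0, 12.0]]
```

In this model each host injects roughly half as much data, and the 2(N-1) sequential ring steps disappear; real gains depend on message size, topology, and the software stack.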

Direct Comparison: HDR vs. NDR Key Differences

The following table summarizes the key differentiators between the two InfiniBand standards across core technical domains:

| Feature | HDR (200G) | NDR (400G) | Key Improvement |
| --- | --- | --- | --- |
| Bandwidth | 200 Gb/s | 400 Gb/s | Doubled throughput. |
| Lane Speed | 50 Gb/s | 100 Gb/s | Doubled the per-lane signaling rate. |
| Switch Generation | NVIDIA Quantum | NVIDIA Quantum-2 | Architectural advances and higher port density. |
| In-Network Computing | Earlier-generation collective offload. | Enhanced offload for collective operations (Quantum-2). | Reduces GPU idle time. |
| Form Factor | QSFP56 | OSFP / QSFP112 | Better thermal management for higher-power optics. |
| Target Workload | Traditional HPC, standard ML training. | LLMs, Generative AI, and hyperscale distributed computing. | Headroom for larger, communication-bound workloads. |

Use Case Analysis: When to Upgrade

The decision to deploy HDR or NDR depends on the workload, budget, and desired scale. This section helps system architects determine the most suitable standard for their current and future computing needs.

| Use Case | Recommended Standard | Rationale |
| --- | --- | --- |
| Massive LLM Training | NDR (400G) | Essential for H100/H200 cluster performance and leveraging In-Network Computing benefits for scale. |
| Existing A100 Clusters | HDR (200G) | A mature, cost-effective solution that still provides excellent throughput for this specific GPU generation. |
| Future-Proofing & Scale | NDR (400G) | Provides a significantly flatter topology (fewer switches needed) and capacity headroom for expansion over the next 3-5 years. |
| Budget-Sensitive HPC | HDR (200G) | Lower initial cost for hardware and cabling while maintaining industry-leading low latency for smaller clusters. |
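The "fewer switches needed" point can be estimated with a simplified two-tier (leaf/spine) non-blocking design: each leaf dedicates half its ports to hosts and half to spine uplinks, and the spines need enough ports to terminate every uplink. The helper below is a hypothetical sizing sketch under those assumptions, using the 40-port (Quantum) and 64-port (Quantum-2) radix figures; it ignores rail-optimized layouts, oversubscription, and spare ports.

```python
import math


def two_tier_switch_count(hosts: int, radix: int) -> dict:
    """Estimate leaf and spine counts for a non-blocking 2-tier fat tree."""
    down_ports = radix // 2  # host-facing ports per leaf
    if hosts > radix * down_ports:
        raise ValueError("exceeds 2-tier capacity; a third tier is needed")
    leaves = math.ceil(hosts / down_ports)
    uplinks = leaves * (radix - down_ports)  # one uplink per remaining port
    spines = math.ceil(uplinks / radix)
    return {"leaves": leaves, "spines": spines, "total": leaves + spines}


for radix in (40, 64):  # Quantum (HDR) vs. Quantum-2 (NDR) port counts
    print(radix, two_tier_switch_count(512, radix))
# 40 {'leaves': 26, 'spines': 13, 'total': 39}
# 64 {'leaves': 16, 'spines': 8, 'total': 24}
```

For the same 512 endpoints, this rough estimate drops from 39 switches to 24; a production design would also account for cabling, failure domains, and growth.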

The Physical Layer: Optics and Cabling Requirements

The increase in speed and power associated with NDR necessitates different physical components. Choosing the right interconnects is critical for signal integrity and sustained reliability at 400G and above.

Transceivers: OSFP/QSFP112 vs. QSFP56

The shift from 50G to 100G signaling significantly increases the power consumption and heat generation of the optics.

- HDR (200G) utilizes the widely adopted QSFP56 form factor.

- NDR (400G) primarily adopts OSFP or QSFP112. OSFP in particular is a larger module designed to manage the higher thermal loads and power requirements, ensuring stability and signal integrity at 400G and 800G speeds.

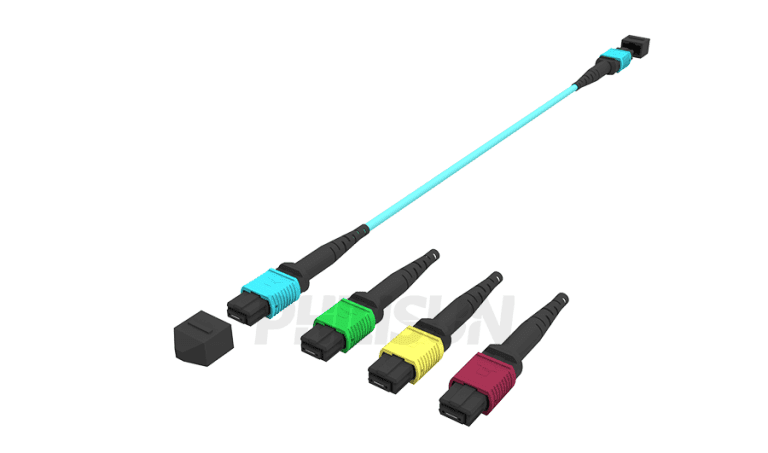

Fiber Connectivity: The Need for MPO-16

The highest-density NDR links, such as the 800G twin-port OSFP connections used on Quantum-2 switches, are physically realized as two 400G channels operating in parallel.

- HDR (200G) typically uses MPO-12 based fiber connections (4 transmitting fibers and 4 receiving fibers).

- NDR (400G/800G) deployments require higher-density cabling. For 800G links, MPO-16 connectors are used to accommodate the 8 transmitting and 8 receiving fibers (16 in total) needed for dual 400G channels.
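These fiber counts follow from how parallel multimode optics work: each lane gets its own fiber in each direction, so a link needs 2 × lanes fibers. A small sketch, assuming one lane per fiber and considering only the MPO-12 and MPO-16 connector sizes discussed here (function names are hypothetical):

```python
MPO_SIZES = (12, 16)  # connector fiber counts considered in this sketch


def fibers_needed(lanes: int) -> int:
    # Parallel optics: one transmit fiber and one receive fiber per lane
    return 2 * lanes


def smallest_mpo(lanes: int) -> int:
    """Smallest connector in MPO_SIZES that holds every fiber of the link."""
    need = fibers_needed(lanes)
    for size in MPO_SIZES:
        if size >= need:
            return size
    raise ValueError(f"{need} fibers exceed a single connector in this sketch")


print(fibers_needed(4), smallest_mpo(4))  # HDR 200G, 4 lanes -> prints: 8 12
print(fibers_needed(8), smallest_mpo(8))  # dual 400G, 8 lanes -> prints: 16 16
```

The 4-lane case leaves four positions of an MPO-12 unused, while the 8-lane case fills an MPO-16 exactly.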

PHILISUN Interconnects: We supply validated, high-performance NDR 400G AOCs that meet the stringent low-BER requirements of NVIDIA environments.

Conclusion

InfiniBand NDR is not merely an incremental speed bump; it is an architectural necessity driven by the demands of next-generation AI and supercomputing. The combination of 400G bandwidth and the deep In-Network Computing capabilities of the NVIDIA Quantum-2 switch fabric delivers a competitive advantage critical for accelerating LLM training and data processing.

Ensure your significant investment in Quantum-2 and modern GPUs is supported by compliant, high-integrity interconnects.

Contact PHILISUN's technical specialists today for a detailed Bill of Materials (BOM) consultation, and secure the validated 400G AOCs and MPO-16 fiber solutions required for your reliable InfiniBand NDR deployment.

Frequently Asked Questions (FAQ)

Q1: Are NDR and HDR InfiniBand directly compatible?

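- A: Not directly at the physical layer. They use different physical modules (OSFP/QSFP112 vs. QSFP56) and different per-lane signaling rates (100G vs. 50G). Connecting NDR equipment to HDR equipment requires specialized breakout cables or adapters, and such a link then runs at HDR speed.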
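When equipment from different generations is joined through such cables or adapters, the link can only run at a rate both ends support. The toy function below illustrates a "highest common rate" rule; the capability sets are hypothetical examples, not a statement of what any specific NVIDIA device supports, and the rates are nominal 4-lane port speeds.

```python
from typing import Iterable, Optional

# Nominal InfiniBand data rates in Gb/s per 4-lane port
RATES = {"HDR": 200, "NDR": 400}


def negotiated_rate(end_a: Iterable[str], end_b: Iterable[str]) -> Optional[str]:
    """Return the fastest rate name supported by both link ends, or None."""
    common = set(end_a) & set(end_b)
    if not common:
        return None
    return max(common, key=RATES.__getitem__)


# Hypothetical capability sets for illustration only
ndr_port = {"NDR", "HDR"}
hdr_port = {"HDR"}
print(negotiated_rate(ndr_port, hdr_port))  # HDR
```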

Q2: Does NDR 400G have lower latency than HDR 200G?

- A: Often, at the system level. While the signaling complexity is higher, NDR’s end-to-end latency is often lower because doubling the link rate halves the time needed to serialize each message, the flatter Quantum-2 network topology reduces the number of hops, and In-Network Computing significantly reduces collective operation processing time.
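Serialization delay, the time to clock a message's bits onto the wire, is one latency component that shrinks directly with bandwidth: it equals message size divided by link rate. A minimal sketch using nominal rates; it ignores switch traversal, cable propagation, and protocol overhead, and the function name is hypothetical.

```python
def serialization_ns(message_bytes: int, link_gbps: float) -> float:
    """Time to put message_bytes onto a link of link_gbps, in nanoseconds."""
    bits = message_bytes * 8
    return bits / link_gbps  # bits / (Gb/s) = ns


for size in (4096, 1 << 20):  # 4 KiB and 1 MiB messages
    hdr = serialization_ns(size, 200)
    ndr = serialization_ns(size, 400)
    print(f"{size} B: HDR {hdr:.2f} ns, NDR {ndr:.2f} ns")
# 4096 B: HDR 163.84 ns, NDR 81.92 ns
# 1048576 B: HDR 41943.04 ns, NDR 20971.52 ns
```

For small messages the saving is tens of nanoseconds; for large transfers it dominates, which is why bandwidth-heavy AI collectives benefit most.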

Q3: What is the primary role of In-Network Computing in NDR?

- A: It offloads data aggregation and reduction tasks (collective operations) from the GPUs to the Quantum-2 switch ASIC, freeing up expensive GPU cycles and dramatically accelerating distributed training jobs, especially for LLMs.