The modern enterprise data center is no longer defined by racks of servers; it is defined by its network architecture and its ability to scale computation without compromising latency. Two foundations now shape most design decisions: the Spine-Leaf topology and the widespread deployment of fiber optics. Together they provide the low, predictable latency required for critical applications, AI workloads, and distributed storage. For IT managers, understanding how to integrate high-density fiber components into this architectural framework is crucial for building a resilient and cost-effective digital core.

Defining the Modern Enterprise Data Center Landscape

The current enterprise facility is built to achieve agility and performance levels previously reserved for cloud providers, requiring a strategic approach to infrastructure design.

Core Function vs. Colocation/Cloud Facilities

An enterprise data center is typically a privately owned and operated facility, giving the owner complete control over security, compliance, and customization. This contrasts sharply with hyperscale or shared colocation centers. That control allows granular optimization of the network fabric for specific business applications, such as high-frequency trading platforms or proprietary AI development environments. These applications demand network designs built for deterministic performance rather than generalized scale.

The Infrastructure Triad: Compute, Storage, and Networking

A modern facility balances three integrated components, all reliant on the network for cohesion:

- Compute: Servers, often highly virtualized or specialized with GPUs for accelerating machine learning.

- Storage: High-speed flash arrays (NVMe) and scalable storage area networks (SANs) that demand exceptionally low latency from the network.

- Networking: The high-speed fabric linking them all. This layer is now often the primary determinant of application performance, and investment is concentrated here to avoid bottlenecks.

Network Architecture and Fabric Design

The shift from legacy Three-Tier networks (Core, Aggregation, Access) to the Spine-Leaf design has become the standard way to achieve predictable throughput in high-performance enterprise environments.

The Shift to Spine-Leaf Topology

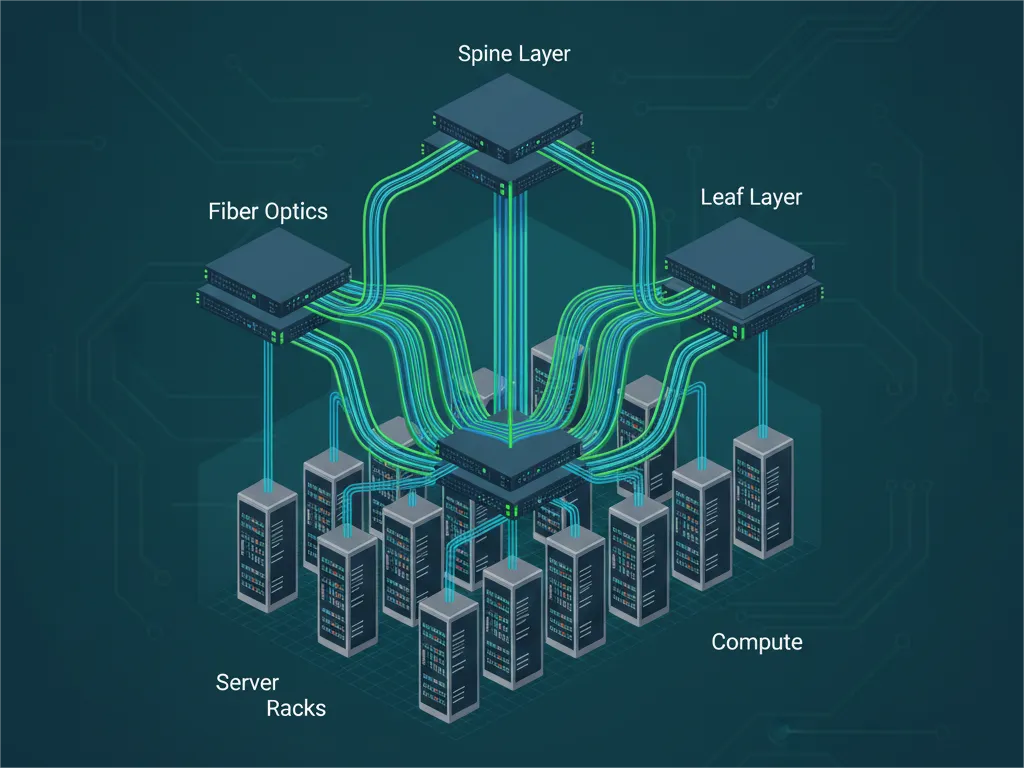

The Spine-Leaf architecture (a variant of the Clos network) consists of two main tiers connected in a full mesh, which can be made non-blocking when each Leaf has as much uplink capacity as server-facing capacity:

- Leaf Switches: Connect all end devices (servers, storage, firewalls). The Leaf layer aggregates traffic and is responsible for routing decisions and enforcing policy.

- Spine Switches: Connect to every Leaf switch, so each Leaf can reach every other Leaf through any Spine. The Spine layer acts as a high-capacity, low-latency backplane.

This topology fundamentally changes traffic patterns. It prioritizes East-West traffic (server-to-server communication within the data center) and ensures that traffic between any two Leaf switches crosses at most two hops (Leaf to Spine, then Spine to Leaf). Because every Leaf-to-Leaf path has the same length, latency stays low and predictable.
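
The two-hop property can be checked with a small model. The following is a minimal sketch, assuming a hypothetical fabric of 4 Spines and 8 Leaves with one link per Leaf-Spine pair; the switch names and the functions `build_fabric` and `leaf_to_leaf_paths` are illustrative and not taken from any vendor tool.

```python
from itertools import product


def build_fabric(num_spines: int, num_leaves: int) -> set[tuple[str, str]]:
    """Return the set of (leaf, spine) links in a full-mesh two-tier fabric."""
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    # Every leaf connects to every spine; leaves never connect to each other.
    return set(product(leaves, spines))


def leaf_to_leaf_paths(links: set[tuple[str, str]], src: str, dst: str) -> list[list[str]]:
    """List every shortest path between two leaves (one per shared spine)."""
    spines_of_src = {spine for (leaf, spine) in links if leaf == src}
    spines_of_dst = {spine for (leaf, spine) in links if leaf == dst}
    return [[src, spine, dst] for spine in sorted(spines_of_src & spines_of_dst)]


# Hypothetical fabric size, chosen only for illustration.
links = build_fabric(num_spines=4, num_leaves=8)
paths = leaf_to_leaf_paths(links, "leaf1", "leaf7")
for path in paths:
    print(" -> ".join(path))
print(f"{len(paths)} equal-cost paths, {len(paths[0]) - 1} hops each")
```

In this model each additional Spine adds one more equal-cost path between every pair of Leaves, which is why capacity in a Spine-Leaf fabric is usually grown by adding Spines rather than by replacing them.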

High-Speed Interconnect Strategy (400G and Beyond)

To handle the heavy East-West traffic load, Leaf-to-Spine link speeds must keep pace with server-facing capacity. This involves deploying 400G and, increasingly, 800G optical transceivers to link the Leaf and Spine switches. Links at these speeds leave little margin for insertion loss and reflections, so they depend on high-quality Single Mode (OS2) fiber, clean connectors, and precision optics. Selecting reliable, low-power optics is key to keeping the total cost of ownership (TCO) sustainable. PHILISUN specializes in providing certified 400G QSFP-DD and 800G OSFP transceivers and cabling to maintain signal integrity across these critical Spine-Leaf connections.
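
One way to judge whether uplink speeds are sufficient is the oversubscription ratio of a Leaf: server-facing capacity divided by Spine-facing capacity. The sketch below is illustrative only; the port counts are hypothetical and the function name `oversubscription_ratio` is an assumption, not part of any product.

```python
def oversubscription_ratio(downlinks: int, downlink_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Ratio of server-facing capacity to spine-facing capacity on one leaf."""
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("a leaf needs at least one uplink with positive speed")
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)


# Hypothetical leaf: 48 x 100G server ports, 8 x 400G uplinks.
ratio = oversubscription_ratio(48, 100, 8, 400)
print(f"{ratio:.2f}:1")  # 4800 Gb/s down / 3200 Gb/s up = 1.50:1

# Hypothetical non-blocking leaf: 32 x 100G server ports, 8 x 400G uplinks.
print(f"{oversubscription_ratio(32, 100, 8, 400):.2f}:1")  # 1.00:1
```

A ratio of 1:1 means the Leaf can forward all server traffic to the Spines at line rate; higher ratios trade some worst-case throughput for lower optics and port cost.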

Networking Protocols

The fabric relies on modern protocols to virtualize and prioritize traffic: VXLAN is commonly used for network virtualization and multi-tenancy, while RoCE (RDMA over Converged Ethernet) and its derivatives are widely used for low-latency storage access and GPU-to-GPU communication. RoCE in particular is highly sensitive to packet loss and jitter, which makes a clean, low-error fiber plant all the more important.
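
VXLAN encapsulation also has a practical sizing consequence: each tenant frame is wrapped in extra headers, so the underlay MTU must be raised accordingly. The following is a minimal sketch, assuming an IPv4 underlay and untagged inner Ethernet frames; the constant and function names are illustrative.

```python
# Header sizes in bytes, assuming an IPv4 underlay and no VLAN tags.
INNER_ETHERNET = 14  # inner Ethernet header carried inside the tunnel
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20
VNI_BITS = 24        # width of the VXLAN Network Identifier field


def required_underlay_mtu(inner_ip_mtu: int) -> int:
    """IP MTU the underlay must support so the inner packet is not fragmented."""
    return inner_ip_mtu + INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


print(required_underlay_mtu(1500))  # 1550
print(2 ** VNI_BITS)                # 16777216 possible segment identifiers
```

Under these assumptions, a standard 1500-byte tenant MTU needs an underlay MTU of at least 1550 bytes, which is one reason fabric links are often configured for jumbo frames.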

Physical Infrastructure and Scalability

Effective physical infrastructure planning is what enables the high performance promised by the logical network design.

Power and Cooling Considerations (PUE and Hot/Cold Aisle Containment)

The high-density nature of modern compute racks, coupled with the power requirements of high-speed optics, places extreme demands on facility resources. Efficiency is tracked via PUE (Power Usage Effectiveness), the ratio of total facility energy to the energy consumed by IT equipment. Strategic cooling solutions, such as hot/cold aisle containment, in-row cooling units, and targeted liquid cooling for GPU racks, are essential for removing heat and maintaining hardware stability.
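
PUE is total facility energy divided by IT equipment energy. The sketch below works through one calculation; the load figures are hypothetical, and it uses average power in kW as a stand-in for energy measured over the same period.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility load divided by IT load."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical averages over the same measurement period.
it_kw = 1000.0                       # servers, storage, network
overhead_kw = 350.0 + 80.0 + 20.0    # cooling, power distribution losses, lighting
value = pue(it_kw + overhead_kw, it_kw)
print(f"PUE = {value:.2f}")  # PUE = 1.45
```

In this example, cutting cooling load from 350 kW to 100 kW through better containment would bring the result down to 1.20.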

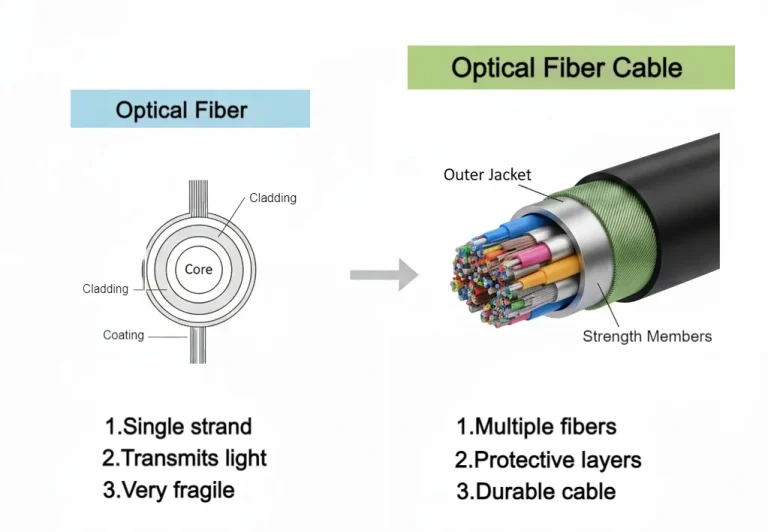

Cabling Management and Density (The Role of MPO/MTP)

The transition to Spine-Leaf significantly increases the total number of fiber ports, as every Leaf must connect to every Spine. Managing thousands of individual fiber links efficiently is impractical without high-density solutions. MPO/MTP connectors, which terminate multiple fibers (commonly 12 or 16, with 24-fiber variants also available) in a single plug, are essential for clean, manageable, and scalable fiber distribution. PHILISUN provides MPO/MTP assemblies that simplify deployment, reduce installation time, and minimize human error in large enterprise environments.
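
The growth in fiber count is easy to estimate, because the number of fabric links is the product of Leaf and Spine counts. The following is a rough sizing sketch; the fabric size, the assumption of 8 fibers per link (a parallel optic with 4 transmit and 4 receive fibers), and the 24-fiber trunk size are all hypothetical inputs, and the trunk figure is a lower bound that ignores routing and spare capacity.

```python
import math


def fabric_links(num_leaves: int, num_spines: int, links_per_pair: int = 1) -> int:
    """Every leaf connects to every spine, so links grow as leaves x spines."""
    return num_leaves * num_spines * links_per_pair


def mpo_trunks_needed(links: int, fibers_per_link: int, fibers_per_trunk: int) -> int:
    """Lower bound on trunk cables if fibers could be packed perfectly."""
    return math.ceil(links * fibers_per_link / fibers_per_trunk)


# Hypothetical fabric: 32 leaves, 8 spines, one link per leaf-spine pair.
links = fabric_links(num_leaves=32, num_spines=8)
fibers = links * 8  # assumed 8 fibers per parallel-optic link
trunks = mpo_trunks_needed(links, fibers_per_link=8, fibers_per_trunk=24)
print(links, fibers, trunks)  # 256 2048 86
```

Even this modest example involves more than two thousand fibers, which illustrates why multi-fiber connectors and trunk cables are preferred over individually terminated duplex links.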

Physical Security and Compliance Requirements

For private enterprise facilities, physical infrastructure must satisfy the regulatory and audit frameworks that apply to the business (e.g., HIPAA, GDPR, SOC 2). This calls for layered physical access control, comprehensive video surveillance, strict environmental monitoring, and resilient fire suppression systems, all integrated into a unified management platform.

Conclusion

The successful enterprise data center is defined by its ability to integrate the Spine-Leaf architecture with a high-performance fiber optic strategy. This combination delivers the predictable, low-latency fabric essential for modern AI and storage workloads. By prioritizing certified components and high-density cabling, IT architects can maximize efficiency and ensure the network infrastructure is ready for the demands of 400G and beyond.

For end-to-end optical and cabling solutions built for the Spine-Leaf architecture, explore the complete range of PHILISUN Data Center Solutions.

FAQ

Q1: What is the main advantage of the Spine-Leaf topology?

A1: It provides low, predictable latency and high bisection bandwidth for server-to-server traffic, with at most two hops between any two Leaf switches.

Q2: What is PUE, and what is the ideal goal?

A2: PUE (Power Usage Effectiveness) measures how efficiently a data center uses energy: total facility energy divided by the energy consumed by IT equipment. The theoretical ideal is 1.0, and a value of about 1.2 or lower is considered excellent.

Q3: Why is MPO cabling essential in Spine-Leaf fabrics?

A3: It provides the high fiber density (commonly 12 or 16 fibers per connector) needed to efficiently connect the thousands of ports that large Spine-Leaf fabrics require.

Q4: What is the core function of the Leaf switch layer?

A4: To connect end devices (servers, storage) and aggregate all traffic toward the Spine switches.

Q5: What does “enterprise” typically mean in this context?

A5: A private, corporate-owned and operated facility, built for specific organizational needs, unlike a hyperscale or colocation center.