NVIDIA LinkX Ecosystem: Technical Analysis and Strategic Sourcing of Compatible 400G/800G Interconnects

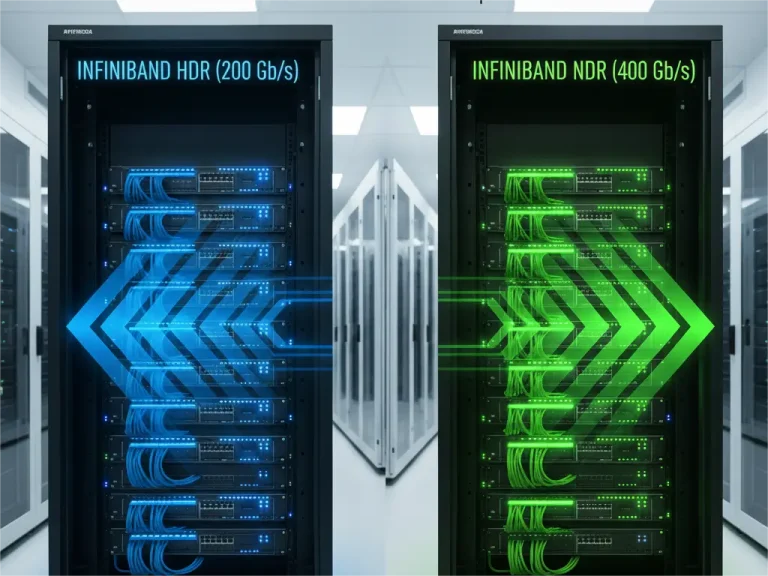

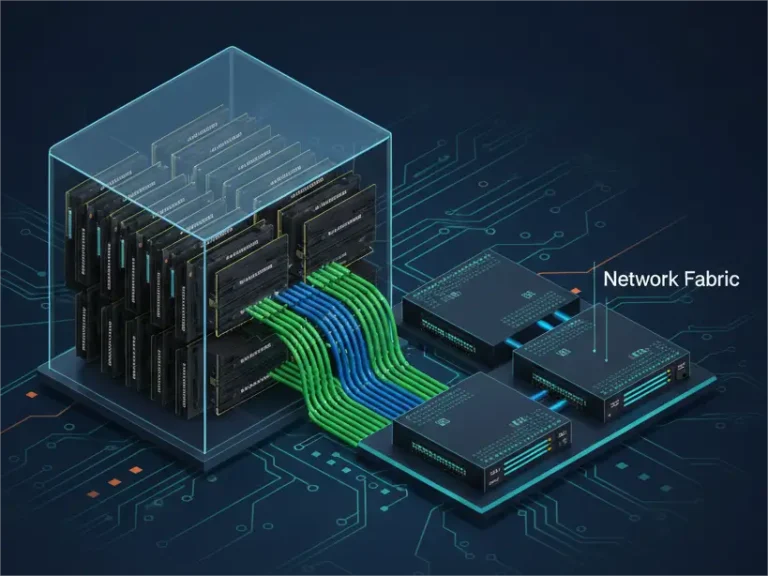

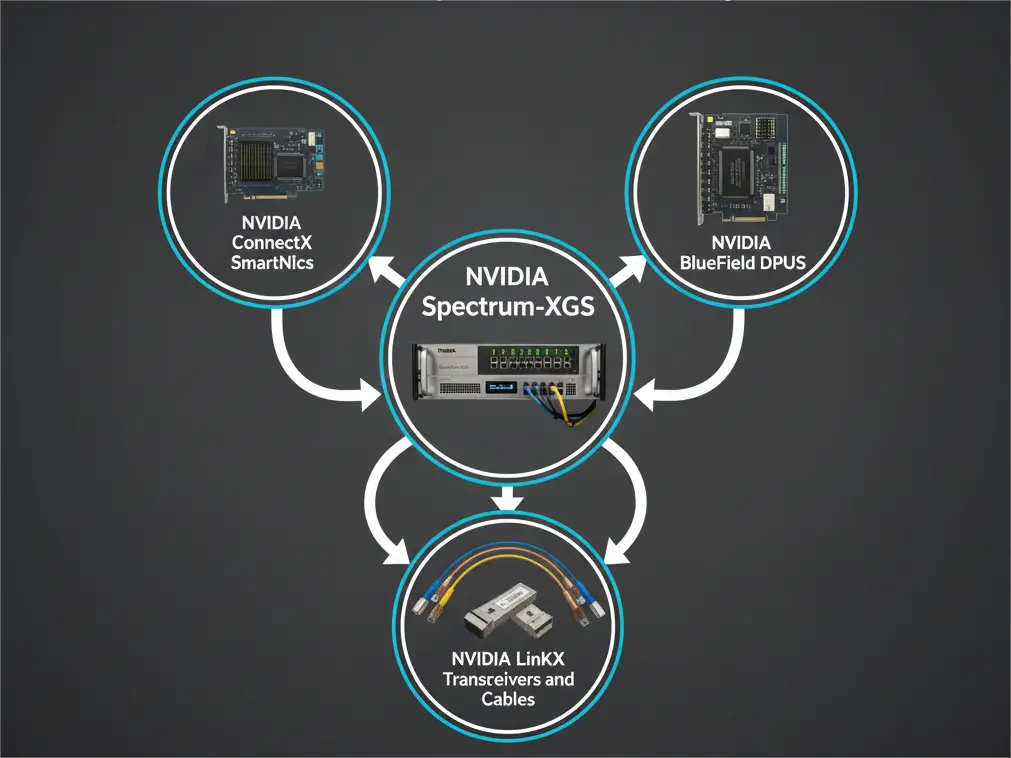

The computational power of modern AI and High-Performance Computing (HPC) clusters is increasingly constrained by the efficiency of the interconnect fabric. Within the NVIDIA ecosystem, LinkX is the product line that defines high-speed connectivity: a suite of NVIDIA-certified DACs, AOCs, and optical transceivers engineered to deliver the low latency and high bandwidth that InfiniBand (HDR, NDR, XDR) and high-speed Ethernet fabrics require under peak load. LinkX serves as the certified bridge between DGX compute nodes and NVIDIA networking equipment such as Quantum InfiniBand and Spectrum-X Ethernet switches, helping ensure cluster-wide stability.

The Technical Imperative: LinkX Compliance and Failure Points

The core function of LinkX is to guarantee cluster stability, especially as fabrics accelerate toward 800G-class links (twin-port 2x400G NDR optics and 800G XDR ports). Every optical module must pass rigorous electrical and optical qualification to maintain microsecond-scale latency and an extremely low Bit Error Rate (BER). Certified performance is not discretionary: NVIDIA hardware performs compliance checks on the modules plugged into it.

The Cost of Non-Compliance

Using uncertified or substandard transceivers introduces two major points of failure, even when the components appear functional:

Device Initialization Failure

NVIDIA devices, in particular ConnectX NICs and Spectrum switches, validate each module when it is inserted. They read the module's internal EEPROM (Electrically Erasable Programmable Read-Only Memory) to verify its vendor ID, part number, and key electrical parameters. If this data does not match an expected LinkX profile, the device may refuse to initialize the module, leaving the port inactive or flagged as disabled by the cluster management software.
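The exact checks NVIDIA firmware performs are not publicly documented, so the following is only a sketch of the kind of identity fields a host can read from a module. It assumes the CMIS memory map used by QSFP-DD and OSFP modules (vendor name at bytes 129-144, vendor OUI at 145-147, and part number at 148-163 of Upper Page 00h, with the SFF-8024 identifier in byte 0). The allowlist policy, `is_accepted`, and the sample vendor and part number are hypothetical.

```python
from dataclasses import dataclass

# SFF-8024 identifier values found in byte 0 of the module memory map.
IDENTIFIERS = {0x18: "QSFP-DD", 0x19: "OSFP"}

# CMIS Upper Page 00h fields, as offsets into a flat 256-byte view
# (lower page at 0-127, Upper Page 00h at 128-255).
VENDOR_NAME = slice(129, 145)  # 16 ASCII bytes, space padded
VENDOR_OUI = slice(145, 148)   # 3-byte IEEE company ID
VENDOR_PN = slice(148, 164)    # 16 ASCII bytes, space padded


@dataclass(frozen=True)
class ModuleIdentity:
    form_factor: str
    vendor_name: str
    vendor_oui: str
    part_number: str


def parse_identity(eeprom: bytes) -> ModuleIdentity:
    """Decode the identity fields a host typically reads at insertion time."""
    if len(eeprom) < 256:
        raise ValueError("need lower page plus Upper Page 00h (256 bytes)")
    return ModuleIdentity(
        form_factor=IDENTIFIERS.get(eeprom[0], f"unknown (0x{eeprom[0]:02x})"),
        vendor_name=eeprom[VENDOR_NAME].decode("ascii", "replace").strip(),
        vendor_oui=eeprom[VENDOR_OUI].hex(":"),
        part_number=eeprom[VENDOR_PN].decode("ascii", "replace").strip(),
    )


def is_accepted(identity: ModuleIdentity, allowlist: set) -> bool:
    """Hypothetical acceptance policy: a (vendor, part number) allowlist."""
    return (identity.vendor_name, identity.part_number) in allowlist


def build_sample_eeprom() -> bytes:
    """Construct a fake OSFP memory image for demonstration only."""
    buf = bytearray(256)
    buf[0] = 0x19
    buf[VENDOR_NAME] = b"EXAMPLE VENDOR".ljust(16)
    buf[VENDOR_OUI] = bytes([0x00, 0x11, 0x22])
    buf[VENDOR_PN] = b"HYPO-800G-SR8".ljust(16)
    return bytes(buf)


identity = parse_identity(build_sample_eeprom())
print(identity)
print(is_accepted(identity, {("EXAMPLE VENDOR", "HYPO-800G-SR8")}))
```

A real host would read these bytes over the module's management interface rather than from a prepared buffer; the point here is only that a mismatch in any decoded field is enough for a strict policy to reject the module.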

Silent Performance Degradation

Even when a module links up, poor signal integrity (SI) raises the raw (pre-FEC) bit error rate. At 50G and 100G PAM4 lane rates, Forward Error Correction (FEC) is always active and can correct only a fixed number of errors per codeword. As the pre-FEC error rate climbs toward that limit, uncorrectable codewords begin to appear, and the resulting packet drops and retransmissions increase end-to-end latency and reduce effective throughput. Because large-scale AI training jobs depend on tightly coupled collective communication, a few degraded links can slow the entire supercomputer.
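To see why errors beyond FEC's correction capacity matter, the following sketch estimates how often a codeword becomes uncorrectable. It uses the RS(544,514) "KP4" code defined in IEEE 802.3 for 50G and 100G PAM4 lanes (10-bit symbols, up to 15 symbol errors corrected per codeword) as a representative example; the FEC on a particular NVIDIA link may differ. The model also assumes independent bit errors, which understates the burst errors seen on real links.

```python
from math import comb

# RS(544,514) "KP4" FEC: 544 ten-bit symbols per codeword, 514 of them payload,
# correcting up to t = 15 symbol errors per codeword.
N_SYMBOLS, K_SYMBOLS, T_CORRECTABLE, BITS_PER_SYMBOL = 544, 514, 15, 10


def symbol_error_rate(ber: float) -> float:
    """Probability that a 10-bit symbol has at least one bit error,
    assuming independent bit errors (an idealization)."""
    return 1.0 - (1.0 - ber) ** BITS_PER_SYMBOL


def uncorrectable_probability(ber: float) -> float:
    """Probability that a codeword contains more than t symbol errors."""
    p = symbol_error_rate(ber)
    return sum(
        comb(N_SYMBOLS, k) * p**k * (1.0 - p) ** (N_SYMBOLS - k)
        for k in range(T_CORRECTABLE + 1, N_SYMBOLS + 1)
    )


print(f"FEC overhead: {N_SYMBOLS / K_SYMBOLS - 1:.2%}")
for ber in (1e-6, 1e-5, 1e-4, 1e-3):
    print(f"pre-FEC BER {ber:.0e} -> P(uncorrectable) "
          f"{uncorrectable_probability(ber):.3e}")
```

The probability of an uncorrectable codeword rises by many orders of magnitude as the pre-FEC BER moves from 1e-6 toward 1e-3. This "cliff" is why degradation can stay silent: a link looks healthy until its margin is gone. The FEC overhead itself is fixed at 30/514, or about 5.8%, whether the link is clean or marginal.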

400G and 800G Form Factors in the Ecosystem

As AI model sizes continue to grow rapidly, interconnect bandwidth has moved from 400G to 800G, driving widespread adoption of two high-density optical module form factors: QSFP-DD and OSFP.

QSFP-DD (Quad Small Form-factor Pluggable Double Density)

Built on the standard QSFP footprint, QSFP-DD doubles the electrical interface from 4 lanes to 8. It achieves 400G (8x50G PAM4) and 800G (8x100G PAM4) while keeping a relatively small physical size. It is often favored for its backward compatibility with legacy QSFP28 modules, which offers flexibility during cluster upgrades.

OSFP (Octal Small Form-factor Pluggable)

The OSFP is physically larger than the QSFP-DD and was designed specifically for higher power dissipation. Like QSFP-DD, it supports 8 electrical lanes. NVIDIA adopted it as a primary form factor for the NDR generation, where twin-port 800G OSFP modules carry two 400G NDR links, and the same form factor extends to 1.6T twin-port modules for 800G XDR. Its enhanced thermal management is crucial for 800G optics, whose power draw can approach or exceed 18 Watts.
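The lane arithmetic behind these form factors fits in a small data structure. This is a sketch: the `ModuleConfig` type and the list of configurations are illustrative, lane rates are nominal payload rates (the rate on the wire is slightly higher to carry FEC), and the twin-port entries assume the convention of splitting one 8-lane OSFP into two 4-lane links.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleConfig:
    name: str
    electrical_lanes: int
    lane_rate_gbps: int  # nominal payload rate per electrical lane
    ports: int = 1       # twin-port modules split the lanes into two links

    @property
    def aggregate_gbps(self) -> int:
        return self.electrical_lanes * self.lane_rate_gbps

    @property
    def per_port_gbps(self) -> int:
        return self.aggregate_gbps // self.ports


CONFIGS = [
    ModuleConfig("QSFP28", 4, 25),
    ModuleConfig("QSFP-DD 400G", 8, 50),
    ModuleConfig("QSFP-DD 800G", 8, 100),
    ModuleConfig("OSFP 800G twin-port (2x NDR)", 8, 100, ports=2),
    ModuleConfig("OSFP 1.6T twin-port (2x XDR)", 8, 200, ports=2),
]

for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.aggregate_gbps}G total, "
          f"{cfg.per_port_gbps}G per port")
```

Keeping lanes and ports separate makes it explicit that an "800G" OSFP on an NDR switch carries two 400G links, while a QSFP-DD 800G module presents a single 800G port.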

Understanding these form factors and their strategic deployment within the NVIDIA architecture is the cornerstone of effective procurement planning.

Deep Dive into Optical Solutions: SR8 and AOC for AI Clusters

AI interconnect solutions demand both speed and variety. The LinkX philosophy encompasses everything from short-reach copper cables to long-haul optical solutions. Two crucial technologies for high-density cluster connections are the Short-Reach (SR8) standard and Active Optical Cables (AOCs).

The Role of Short-Reach (SR8) MPO-16 Optics

AI superclusters rely heavily on Short-Reach (SR8) optical transceivers for connections within a rack row or between nearby racks, over multimode fiber links of up to roughly 50 to 100 meters.

- Parallel Optics: SR8 modules use parallel optics, transmitting across 16 fibers (8 transmit and 8 receive) housed in a single MPO-16 connector.

- 800G Implementation: In the 800G era, each fiber lane carries a 100 Gb/s PAM4 signal, so the 8 TX and 8 RX lanes deliver 800G in each direction (8 x 100G). This parallel approach is simple and low-latency, which makes it well suited to massive, tightly integrated clusters such as DGX SuperPODs.

- MPO-16 Connectors: The MPO-16 connector is the physical interface for 400G/800G SR8 modules. It demands meticulous attention to fiber end-face cleanliness and high installation precision so that all 16 fibers align correctly, minimizing insertion loss and preserving signal integrity.
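The lane and fiber arithmetic above can be checked in a few lines, together with a simple channel loss estimate that shows why MPO-16 cleanliness and alignment matter. The 106.25 Gb/s per-lane line rate (53.125 GBd with PAM4) is the standard signaling rate for 100G PAM4 lanes. The fiber attenuation, per-connector loss, and 1.8 dB budget are placeholder assumptions, not figures for any specific module; real budgets come from the transceiver datasheet.

```python
PAM4_BITS_PER_SYMBOL = 2
SR8_LANES = 8


def baud_rate_gbd(line_rate_gbps: float) -> float:
    """PAM4 carries 2 bits per symbol, so the symbol rate is half the bit rate."""
    return line_rate_gbps / PAM4_BITS_PER_SYMBOL


def channel_loss_db(length_m: float, fiber_db_per_km: float,
                    mated_pairs: int, loss_per_pair_db: float) -> float:
    """Fiber attenuation plus connector losses along one lane."""
    return length_m / 1000.0 * fiber_db_per_km + mated_pairs * loss_per_pair_db


def margin_db(budget_db: float, loss_db: float) -> float:
    """Positive margin means the channel fits inside the loss budget."""
    return budget_db - loss_db


# 800G SR8: eight 100G lanes, each signaling at 106.25 Gb/s to carry FEC overhead.
LANE_LINE_RATE_GBPS = 106.25
print("fibers per MPO-16:", 2 * SR8_LANES)
print("symbol rate per lane (GBd):", baud_rate_gbd(LANE_LINE_RATE_GBPS))
print("aggregate line rate (Gb/s):", SR8_LANES * LANE_LINE_RATE_GBPS)

# Hypothetical channel: 100 m of OM4 and two mated MPO-16 pairs.
# All numeric inputs below are placeholders; use the real datasheets.
loss = channel_loss_db(100, fiber_db_per_km=3.0, mated_pairs=2,
                       loss_per_pair_db=0.35)
print("channel loss (dB):", round(loss, 2))
print("margin vs. assumed 1.8 dB budget (dB):", round(margin_db(1.8, loss), 2))
```

In this hypothetical channel the connectors, not the fiber, dominate the loss, so a single dirty or misaligned MPO-16 end face can consume most of the remaining margin.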

Active Optical Cable (AOC) Applications

Active Optical Cables (AOCs) integrate the optical transceivers directly into the cable assembly, producing a turnkey, plug-and-play solution that avoids separate fiber routing and connector cleaning. They are the preferred choice for mid-range connections, often spanning up to 70 meters or more. You can explore the compatible AOC QSFP-DD (400G Series) solutions to see their practical applications in detail.

- The DAC Replacement: Beyond a few meters (about 2 to 3 meters at 400G/800G lane rates, down from roughly 5 meters in earlier generations), passive copper cables (DACs) become impractical because of severe signal attenuation, bulk, and weight. AOCs offer a lightweight, flexible alternative that reliably covers mid-range distances while maintaining signal quality.

- Preferred for Mid-Range Interconnects: Solutions such as the 400G QSFP-DD AOC and 800G OSFP AOC commonly link servers to Top-of-Rack (ToR) switches and cascade switches within a row of racks. They are core enablers of the high-density, scalable network topologies that modern AI research requires.
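The distance-based reasoning in this section can be summarized as a simple selection rule. This is a sketch, not a design tool. The thresholds (3 m for DAC, 70 m for AOC, 100 m for SR8 multimode) are illustrative defaults loosely drawn from the figures above, and `choose_medium` and its `structured_cabling` flag are hypothetical. Real limits depend on lane rate, fiber grade, and the specific products.

```python
from enum import Enum


class Medium(Enum):
    DAC = "passive copper DAC"
    AOC = "active optical cable"
    SR8 = "SR8 transceivers + MPO-16 multimode fiber"
    SINGLE_MODE = "single-mode transceivers"


def choose_medium(distance_m: float, *, dac_max_m: float = 3.0,
                  aoc_max_m: float = 70.0, sr8_max_m: float = 100.0,
                  structured_cabling: bool = False) -> Medium:
    """Pick the simplest medium that covers the distance.

    All thresholds are illustrative defaults. `structured_cabling` models a
    site that routes fiber through patch panels; an AOC is one fixed assembly
    and cannot be patched, so separate transceivers are chosen instead.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= dac_max_m:
        return Medium.DAC
    if distance_m <= aoc_max_m and not structured_cabling:
        return Medium.AOC
    if distance_m <= sr8_max_m:
        return Medium.SR8
    return Medium.SINGLE_MODE


for d in (1.5, 20, 85, 400):
    print(d, "m ->", choose_medium(d).value)
```

The rule prefers the simplest passive option first and escalates only when distance or cabling practice requires it, which mirrors the DAC, AOC, and transceiver progression described above.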

Optimizing Procurement: Sourcing Compatible 800G Interconnects

For data center procurement managers and network engineers, the challenge extends beyond technical understanding to strategic sourcing: securing certified performance while keeping the supply chain resilient and capital expenditure under control. The long lead times and high costs often associated with OEM LinkX products can constrain the speed and scale of deployment.

Guaranteeing Compatibility Through Rigorous Testing

A credible interconnect alternative must achieve exact EEPROM compatibility and matching performance with NVIDIA systems. This is not simply a matter of copying an OEM code; it is an engineering effort that requires faithfully reproducing the electrical behavior and thermal profile of the NVIDIA interconnect.
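One small, verifiable piece of EEPROM compatibility is the internal consistency of the memory map. CMIS specifies a page checksum for Upper Page 00h: the low-order 8 bits of the sum of bytes 128 through 221, stored in byte 222. The sketch below verifies that field. It assumes a flat 256-byte image (lower page followed by Upper Page 00h), the helper names are hypothetical, and the offsets should be confirmed against the CMIS revision a module declares.

```python
def cmis_page00_checksum(eeprom: bytes) -> int:
    """Low-order 8 bits of the sum of bytes 128-221 of Upper Page 00h."""
    return sum(eeprom[128:222]) & 0xFF


def checksum_ok(eeprom: bytes) -> bool:
    """Compare the computed value with the stored checksum at byte 222."""
    return cmis_page00_checksum(eeprom) == eeprom[222]


def with_valid_checksum(eeprom: bytes) -> bytes:
    """Return a copy whose checksum byte matches its contents (for testing)."""
    buf = bytearray(eeprom)
    buf[222] = cmis_page00_checksum(buf)
    return bytes(buf)


# Fake memory image with some content in the checksummed range.
image = bytearray(256)
image[129:145] = b"EXAMPLE VENDOR".ljust(16)
image = with_valid_checksum(bytes(image))
print("valid image:", checksum_ok(image))

corrupted = bytearray(image)
corrupted[150] ^= 0x01  # flip one bit inside the checksummed range
print("corrupted image:", checksum_ok(bytes(corrupted)))
```

A correct checksum only shows that the stored data is self-consistent; it says nothing about whether the coded values, or the module's electrical and thermal behavior, match what the host expects.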

Supply chain reliability is crucial for large-scale AI deployment. PHILISUN offers a high-performance, validated alternative to NVIDIA LinkX modules. Our 400G and 800G OSFP/QSFP-DD transceivers, including advanced SR8 and AOC solutions, are rigorously tested for seamless, plug-and-play compatibility with ConnectX and DGX systems. This delivers superior cost efficiency and accelerates your cluster deployment.

The PHILISUN Value Proposition

PHILISUN’s expertise in high-speed optical interconnects enables it to deliver solutions that meet the stringent demands of NVIDIA LinkX, offering a more flexible and cost-effective procurement path. You can browse PHILISUN’s QSFP-DD/OSFP 400G Series and the high-density QSFP-DD 800G Series to compare specifications and offerings.

- Comprehensive Compatibility Validation: Every batch of modules undergoes mandatory interoperability testing on live NVIDIA hardware before shipment. This verification process includes a full audit of the EEPROM coding, CDR lock times, transmitter power consumption, and receiver sensitivity. It ensures the module is correctly recognized and accepted by ConnectX cards and Spectrum switches.

- Supply Chain Agility and TCO Reduction: PHILISUN leverages an independent, optimized optical supply chain, which allows it to offer more competitive pricing and shorter lead times than OEM options. This agility is vital for hyperscale clouds and large AI labs that need to expand or iterate their compute infrastructure rapidly. By offering a high-quality alternative, PHILISUN can substantially lower the Total Cost of Ownership (TCO) of petascale clusters.

- In-Depth Technical Support: Because 800G fabrics are complex to deploy, PHILISUN provides specialized technical consultation to help customers select the right AOC, DAC, or transceiver model for their specific cluster topology, distance requirements, and cooling capabilities.
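The validation described above amounts to a set of pass/fail limits applied to each module. The following is a hypothetical sketch, not PHILISUN's actual test procedure. The parameter names and every numeric limit are placeholders, "transmitter power" is modeled both as module power draw and as optical output power, and the EEPROM audit is reduced to a single boolean.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Limit:
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def check(self, value: float) -> bool:
        if self.minimum is not None and value < self.minimum:
            return False
        if self.maximum is not None and value > self.maximum:
            return False
        return True


# Placeholder acceptance limits; real values come from the module datasheet
# and the host platform's qualification requirements.
LIMITS: Dict[str, Limit] = {
    "cdr_lock_ms": Limit(maximum=100.0),
    "module_power_w": Limit(maximum=18.0),
    "tx_power_dbm": Limit(minimum=-6.0, maximum=4.0),
    "rx_sensitivity_dbm": Limit(maximum=-5.0),  # more negative is better
}


def audit(eeprom_ok: bool, measured: Dict[str, float],
          limits: Dict[str, Limit] = LIMITS) -> List[str]:
    """Return a list of failure descriptions; an empty list means pass."""
    failures = [] if eeprom_ok else ["EEPROM coding mismatch"]
    for name, limit in limits.items():
        if name not in measured:
            failures.append(f"{name}: not measured")
        elif not limit.check(measured[name]):
            failures.append(f"{name}: {measured[name]} outside limits")
    return failures


sample = {"cdr_lock_ms": 40.0, "module_power_w": 16.5,
          "tx_power_dbm": 0.5, "rx_sensitivity_dbm": -7.2}
print(audit(True, sample) or "PASS")
```

Treating a missing measurement as a failure, rather than silently skipping it, keeps an incomplete test run from being recorded as a pass.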

Thermal and Power Efficiency: A Hidden Cost Factor

In the 800G domain, power consumption and thermal management have become primary bottlenecks that restrict data center density: a single 800G OSFP module can draw 18 Watts or more.

- Thermal Optimization: High-quality third-party transceivers are designed with a specific focus on heat dissipation, aiming to reduce operating temperatures while maintaining peak performance through efficient internal circuit design and optimized heatsink construction. Lower operating temperatures extend module lifespan and, critically, reduce the load on the data center’s cooling infrastructure, contributing to substantial operational cost savings.

- Power Budget Analysis: Procurement decisions must include a detailed assessment of module power consumption. PHILISUN is committed to delivering modules whose power consumption is at or below OEM specifications, which reduces risk to rack power and cooling budgets.
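A power budget assessment starts with simple arithmetic. The sketch assumes a hypothetical switch with 32 module cages and compares modules drawing 18 W (the figure cited above) with a hypothetical 16 W alternative. It also converts electrical load to cooling load using 1 W ≈ 3.412 BTU/h. The cage count and the 16 W figure are illustrative only.

```python
W_TO_BTU_PER_HR = 3.412142  # 1 watt of heat is about 3.412 BTU/h


def optics_power_w(cages: int, watts_per_module: float,
                   populated: float = 1.0) -> float:
    """Total module power for a switch with `cages` ports, `populated` fraction filled."""
    return cages * populated * watts_per_module


def heat_load_btu_per_hr(watts: float) -> float:
    return watts * W_TO_BTU_PER_HR


# Hypothetical comparison: a 32-cage switch filled with 18 W versus 16 W modules.
CAGES = 32
for watts in (18.0, 16.0):
    total = optics_power_w(CAGES, watts)
    print(f"{watts} W modules: {total:.0f} W of optics, "
          f"{heat_load_btu_per_hr(total):.0f} BTU/h of heat")

savings_w = optics_power_w(CAGES, 18.0) - optics_power_w(CAGES, 16.0)
print(f"difference per switch: {savings_w:.0f} W")
```

Because nearly all of a module's electrical power ends up as heat, a lower per-module rating reduces both the rack power draw and the heat the cooling system must remove, and the difference scales with every switch and NIC in the cluster.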

Conclusion

Building the next generation of AI supercomputers demands an interconnect infrastructure founded on the highest standards of reliability and performance. NVIDIA LinkX sets these standards. A smart procurement strategy lies in identifying partners who can meet every technical compliance requirement while offering superior supply chain value. From the precision of EEPROM coding to the thermal efficiency of 800G OSFP/QSFP-DD, every detail is instrumental to the cluster’s final performance benchmark.

Ready to procure high-quality, LinkX-compatible interconnects for your next cluster build?

Contact the PHILISUN technical sales team today for immediate consultation and competitive quotes on your 400G and 800G requirements.