NVIDIA Spectrum-XGS 800G: Which Optical Interconnects Are Essential for Giga-Scale AI?

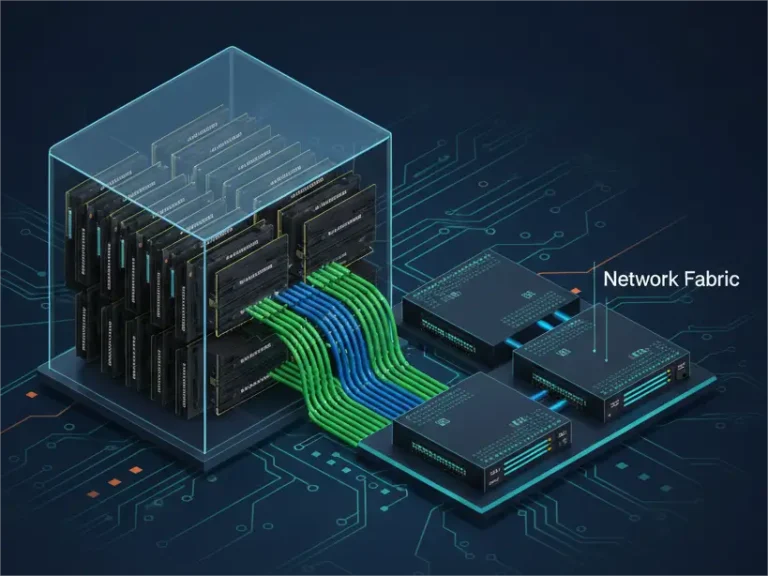

The Hard Truth About Building Giga-Scale AI Fabrics

If you’re architecting AI infrastructure today, you already know the painful reality: GPU performance is no longer the main bottleneck—interconnect throughput is. As models scale from billions to trillions of parameters and clusters grow into multi-building “AI super-factories,” traditional Ethernet fabrics collapse under congestion, packet drops, and unpredictable latency.

This is exactly why NVIDIA launched Spectrum-XGS, the Ethernet platform designed to build distributed, unified AI data centers at giga-scale.

In NVIDIA's own words: “NVIDIA introduces Spectrum-XGS Ethernet to connect distributed data centers into giga-scale AI super-factories.”

But the switch is only half the story. The real performance of Spectrum-XGS depends on the optical interconnects you choose—800G modules, MPO-16 cabling, DAC/AOC strategy, and long-haul DCI optics.

This article is an engineering guide to which interconnects are essential for Spectrum-XGS and how to avoid costly deployment mistakes.

The New Interconnect Challenge: From Single Cluster to Giga-Scale

Defining NVIDIA’s Spectrum-XGS Vision

Spectrum-XGS represents a shift from isolated GPU clusters to continent-scale AI grids—multiple data centers linked as one training fabric.

It enables:

- Unified AI acceleration across physical locations.

- Multi-site redundancy for high-availability training.

- Congestion-free RoCE networking across large fabrics.

This architecture fundamentally changes optical interconnect requirements.

Why Standard High-Speed Ethernet Falls Short

Traditional 400G or 800G Ethernet fabrics suffer from:

- Unpredictable congestion collapse.

- High PFC pause storms.

- Non-deterministic latency under load.

- Sensitivity to tiny amounts of packet loss.

Spectrum-XGS solves these issues through InfiniBand-derived technologies:

- Adaptive routing.

- Shared Buffer Congestion Control (SBCC).

- Advanced telemetry and flow tracking.

But these features only work if the optical layer is flawlessly engineered.

Spectrum-XGS: InfiniBand Intelligence Meets Ethernet

Spectrum-XGS is Ethernet at the control plane, but InfiniBand in behavior—meaning:

- Interconnect loss budgets are tighter.

- Jitter sensitivity is higher.

- Deterministic latency requires clean optics.

- Cabling mistakes have larger performance consequences.

This is why selecting the correct interconnects is no longer optional.

Expertise: Mastering the 800G Physical Layer (The Fabric Core)

The Great 800G Debate: OSFP vs QSFP-DD in Spectrum-XGS

You must choose the correct 800G form factor for switches and links.

OSFP for Maximum Thermal Headroom

- Larger body.

- Better heat dissipation.

- Supports future 1.6T optical modules.

- Ideal for HPC-scale Spectrum-XGS deployments.

QSFP-DD for Backward Compatibility

- Same port width as QSFP28/QSFP56, with cages that accept those modules.

- Enables reuse of legacy hardware.

- Higher temperatures under heavy 800G loads.

For AI clusters larger than 1,000 GPUs, OSFP is generally preferred, especially for SR8 / DR8 modules.

TCO Analysis: Power & Heat of 800G Modules

Different module types impact rack-level heat and cooling budgets.

| 800G Module Type | Typical Power | Notes |
| --- | --- | --- |
| 800G DR8 | 14–16 W | Baseline module for short-reach single-mode links |
| 800G 2×FR4 | 18–20 W | Higher due to DSP complexity |
| 800G 2×DR4 | 16–18 W | Balanced choice for mid-reach links |

Watts per gigabit per second (W per Gb/s) becomes the key metric for AI facilities with tens of thousands of modules. OSFP handles thermal loads better, making it the top choice for DR8-heavy fabrics.
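To make the metric concrete, here is a minimal Python sketch that turns the power ranges in the table into watts per Gb/s and into fleet-level power. The fleet size of 20,000 modules is a hypothetical figure chosen only for illustration, and the function names are our own.

```python
# Power ranges in watts, copied from the table above. Each module runs at 800 Gb/s.
MODULE_POWER_W = {
    "800G DR8": (14.0, 16.0),
    "800G 2xFR4": (18.0, 20.0),
    "800G 2xDR4": (16.0, 18.0),
}
LINE_RATE_GBPS = 800


def watts_per_gbps(power_w: float, rate_gbps: float = LINE_RATE_GBPS) -> float:
    """Power efficiency in watts per Gb/s of line rate."""
    return power_w / rate_gbps


def fleet_power_kw(power_w: float, module_count: int) -> float:
    """Total optics power in kW for a fleet of identical modules."""
    return power_w * module_count / 1000.0


MODULE_COUNT = 20_000  # hypothetical fleet size, for illustration only

for name, (low_w, high_w) in MODULE_POWER_W.items():
    print(
        f"{name}: {watts_per_gbps(low_w):.4f} to {watts_per_gbps(high_w):.4f} W per Gb/s, "
        f"{fleet_power_kw(low_w, MODULE_COUNT):.0f} to "
        f"{fleet_power_kw(high_w, MODULE_COUNT):.0f} kW across {MODULE_COUNT} modules"
    )
```

With these inputs, DR8 works out to 0.0175 to 0.02 W per Gb/s. A 4 W difference per module becomes 80 kW across 20,000 modules, before counting the cooling needed to remove that heat.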

800G Cabling Choice: DAC vs AOC

A simple rule of thumb: use DAC for 0–2 m and AOC for 2–30 m.
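The rule of thumb can be written as a small lookup, sketched here in Python. The function name is hypothetical. Two choices go beyond what the article states: treating exactly 2 m as DAC, and falling back to transceivers plus fiber beyond 30 m.

```python
def pick_short_reach_cable(length_m: float) -> str:
    """Apply the rule of thumb: DAC for 0-2 m, AOC for 2-30 m."""
    if length_m < 0:
        raise ValueError("length_m must be non-negative")
    if length_m <= 2:
        # The two ranges overlap at 2 m; this sketch assumes the cheaper option wins.
        return "DAC"
    if length_m <= 30:
        return "AOC"
    # Outside the rule of thumb: assume pluggable transceivers over structured fiber.
    return "transceiver + fiber"


for run_m in (0.5, 2, 7, 30, 45):
    print(f"{run_m} m -> {pick_short_reach_cable(run_m)}")
```

In practice the cut-over length also depends on cable gauge, bend radius, and the switch vendor's qualified cable list, so treat the thresholds as starting points.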

DAC Advantages

- Lowest cost.

- Zero optical engines.

- Ideal for switch-to-switch in the same rack.

AOC Advantages

- Lightweight and flexible.

- No EMI concerns.

- Lower BER vs passive copper at higher speeds.

Enabling Distributed AI with Optical Resilience

The Distributed DCI Problem in Spectrum-XGS

When you connect Spectrum-XGS fabrics across buildings, latency becomes the enemy. Even 1–2 ms of added latency can collapse:

- RDMA efficiency.

- Collective communication.

- Tensor parallelism in LLM training.

This is why DCI optics must be chosen precisely.
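To see how distance alone eats into that 1–2 ms figure, the following Python sketch estimates fiber propagation delay. It assumes a group index of 1.468, a typical value for single-mode fiber, and it ignores transceiver, FEC, and switch latency. The function names are our own.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GROUP_INDEX = 1.468  # assumed typical value for single-mode fiber


def one_way_fiber_delay_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """One-way propagation delay through fiber, in microseconds."""
    return distance_km * group_index / SPEED_OF_LIGHT_KM_PER_S * 1e6


def round_trip_fiber_delay_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Round-trip propagation delay through fiber, in microseconds."""
    return 2 * one_way_fiber_delay_us(distance_km, group_index)


for km in (2, 10, 40, 80, 120):
    print(
        f"{km:>4} km: one-way {one_way_fiber_delay_us(km):7.1f} us, "
        f"round trip {round_trip_fiber_delay_us(km):7.1f} us"
    )
```

Under these assumptions, propagation costs roughly 4.9 µs per kilometer, so a 120 km span adds more than 1 ms of round-trip delay from the glass alone.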

Choosing the Right Optics for DCI: LR4 vs ZR4

400G-LR4 (up to 10 km)

- Best for campus-scale AI fabric interconnects.

400G-ZR4 / ZR+ (40–120 km)

- Required for metro AI fabrics, multi-data-center training campuses, and low-latency coherent AI backbones.
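The distance ranges above can be encoded as a simple selector, sketched here in Python. The function name is hypothetical. Distances between 10 km and 40 km, and beyond 120 km, are not covered by the ranges quoted in this article, so the sketch returns `None` for them rather than guessing.

```python
from typing import Optional


def pick_dci_optic(distance_km: float) -> Optional[str]:
    """Map a DCI span length to the optic class named in this article."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    if distance_km <= 10:
        return "400G-LR4"
    if 40 <= distance_km <= 120:
        return "400G-ZR4 / ZR+"
    # Not covered by the quoted ranges; needs a case-by-case link design.
    return None


for span_km in (3, 10, 25, 80, 150):
    print(f"{span_km} km -> {pick_dci_optic(span_km)}")
```

A real selection would also check the link's loss budget and whether the span is amplified, not only its length.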

PHILISUN’s long-reach optical series: https://www.philisun.com/product/optical-transceiver-series/

Importance of Low-Loss Fiber: Beyond Standard OS2

Spectrum-XGS requires extremely tight optical budgets. Ultra-Low-Loss (ULL) OS2 fiber is strongly recommended to maintain BER performance across distance.
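A rough link-loss estimate shows why fiber attenuation matters over distance. In this Python sketch, every number is a placeholder assumption rather than a datasheet value: 0.35 dB/km for standard fiber, 0.17 dB/km for ULL fiber, 0.5 dB per mated connector pair, and a 10 dB power budget. Substitute the figures from your own fiber and transceiver specifications.

```python
from dataclasses import dataclass


@dataclass
class FiberLink:
    length_km: float
    attenuation_db_per_km: float  # assumed value, take the real one from the fiber datasheet
    connector_pairs: int
    loss_per_connector_db: float = 0.5  # assumed loss per mated connector pair

    def total_loss_db(self) -> float:
        """Fiber attenuation plus connector losses (splices and aging ignored)."""
        fiber_loss = self.length_km * self.attenuation_db_per_km
        connector_loss = self.connector_pairs * self.loss_per_connector_db
        return fiber_loss + connector_loss


def margin_db(link: FiberLink, power_budget_db: float) -> float:
    """Remaining margin; a negative value means the link exceeds the budget."""
    return power_budget_db - link.total_loss_db()


POWER_BUDGET_DB = 10.0  # hypothetical transceiver budget, for illustration only

standard = FiberLink(length_km=40, attenuation_db_per_km=0.35, connector_pairs=2)
ull = FiberLink(length_km=40, attenuation_db_per_km=0.17, connector_pairs=2)

for label, link in (("standard", standard), ("ULL", ull)):
    print(
        f"{label}: loss {link.total_loss_db():.1f} dB, "
        f"margin {margin_db(link, POWER_BUDGET_DB):+.1f} dB"
    )
```

With these placeholder values, the same 40 km span fails the budget on the standard fiber and passes with margin on the ULL fiber, which is the kind of difference the recommendation above is pointing at.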

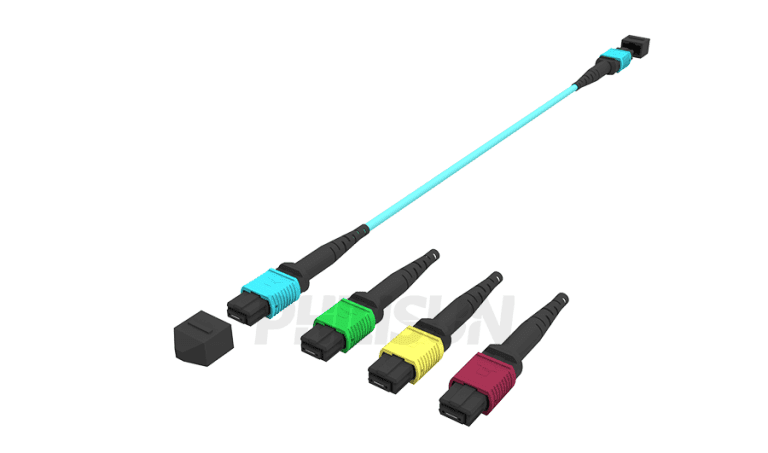

Trustworthiness: MPO-16 Cabling and Certification Standards

MPO-16: The New Standard for 800G

800G SR8 / DR8 requires:

- 16-fiber MPO connectors.

- Correct polarity (Method B or Universal).

- Low insertion loss (<0.75 dB recommended).

A single MPO-12 connector cannot carry the 16 fibers that 800G SR8 / DR8 parallel optics require.
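The fiber count and the polarity requirement can both be checked with a few lines of Python. This sketch assumes two fibers per lane (one transmit, one receive) and models a single Type-B trunk, where position p at one end arrives at position N + 1 − p at the other. Placing transmit fibers in positions 1 to 8 is an assumption made for illustration, so check the transceiver datasheet for the actual lane map.

```python
FIBERS_PER_LANE = 2  # one transmit fiber plus one receive fiber


def fibers_required(lanes: int) -> int:
    """Number of fibers a parallel-optics module with this many lanes needs."""
    return lanes * FIBERS_PER_LANE


def fits_connector(lanes: int, connector_positions: int) -> bool:
    """True if a single connector has enough fiber positions for the module."""
    return fibers_required(lanes) <= connector_positions


def type_b_far_end_position(position: int, connector_positions: int = 16) -> int:
    """Far-end position for a fiber on a Type-B (reversed) trunk."""
    if not 1 <= position <= connector_positions:
        raise ValueError("position out of range for this connector")
    return connector_positions + 1 - position


print("SR8/DR8 fibers:", fibers_required(8))
print("Fits one MPO-16:", fits_connector(8, 16))
print("Fits one MPO-12:", fits_connector(8, 12))

# Assumed lane map: transmit on positions 1-8, receive on positions 9-16.
tx_positions = range(1, 9)
for p in tx_positions:
    print(f"Tx position {p} -> far-end position {type_b_far_end_position(p)}")
```

Because the reversal maps the first half of the connector onto the second half, every transmit fiber lands on a receive position at the far end. That is why polarity has to be validated on every assembly.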

The Role of Strict QA Testing

Large AI fabrics demand connector-level reliability:

- IL/RL testing.

- Temperature/humidity stress.

- 100% polarity validation.

- End-face inspection.

PHILISUN performs these tests on every MPO-16 fiber assembly.

Compliance with IEEE & OIF

PHILISUN optics and cabling meet:

- IEEE 802.3ck / 802.3cu.

- OIF-400G / OIF-800G.

- MPO standards: IEC 61754-7.

This ensures cross-vendor interoperability.

Conclusion: Partnering for Interconnect Supremacy

Five Critical Interconnect Decisions for XGS Architects

To build a Spectrum-XGS fabric that delivers deterministic performance:

- Choose the right 800G form factor (OSFP vs QSFP-DD).

- Optimize module power & cooling budgets.

- Use DAC/AOC correctly for short-reach links.

- Select LR4 or ZR/ZR4 based on DCI distance.

- Deploy MPO-16 cabling with strict QA control.

Poor decisions lead to thermal throttling, packet loss, and multi-million-dollar GPU underutilization.

PHILISUN provides the optical components required for Spectrum-XGS deployments, including 800G SR8 / DR8 optical transceivers, 800G-ready MPO-16 cabling systems, and AOC/DAC for rack-level connections.

If you want deterministic AI-fabric performance across buildings or regions, PHILISUN delivers the optical foundation Spectrum-XGS requires.

FAQ

Q1: Why is 800G necessary for the Spectrum-XGS architecture?

A1: Spectrum-XGS is designed to eliminate network bottlenecks for massive AI workloads. 800G per port is necessary to aggregate the bandwidth from thousands of GPUs and DPUs, ensuring the network can keep up with the compute engine without suffering from congestion or latency.

Q2: Which form factor is better for 800G: OSFP or QSFP-DD?

A2: Both support 800G. OSFP is often chosen for new deployments due to its larger size, which allows for better thermal performance and higher power draw for complex optics. QSFP-DD is preferred where port density and backward compatibility with existing 400G and 100G gear are priorities.

Q3: How does the MPO-16 connector help with 800G density?

A3: MPO-16 is a higher-density connector than the traditional MPO-12. 800G often uses 16 fibers (e.g., DR8). MPO-16 allows the necessary 16 fibers to be housed in a single connection, reducing the total cable bulk and simplifying fiber management in extremely dense racks.

Q4: Can I use standard 400G LR4 optics to connect distributed Super Factories?

A4: Only for very short links (typically under 10 km). For connecting geographically separate data centers (distributed DCI), you need 400G-ZR4 coherent optics. ZR4 provides the necessary distance (up to 80 km) and resilience required to unify the giga-scale AI super-factory over long-haul fiber.

Q5: How does TCO relate to the choice between AOC and DAC?

A5: DAC (Direct Attach Copper) has the lowest unit cost and, when passive, draws almost no power, making it ideal for short-reach, high-volume needs inside a rack. AOC (Active Optical Cable) is more expensive but uses less material and is lighter, reducing cooling costs and improving airflow for longer links (up to 50 m).